Neural networks learn a mapping from inputs to outputs [1]. Understanding that mapping is key to understanding how they make decisions. The field is growing fast: the deep learning market has been projected to reach $190 billion by 2025 [2], which opens up significant opportunities for industries that adopt neural networks.

To build robust, efficient networks, you first need a firm grasp of the basics: layers and activation functions.

Some architectures, such as recurrent neural networks (RNNs), keep track of information over time [1], which matters for tasks where patterns unfold across a sequence. In every architecture, layers and activation functions determine how information moves through the network. With libraries like JAX, we can build complex networks for many tasks, from image recognition to language processing.

Key Takeaways

- Neural networks learn by mapping inputs to outputs [1]

- The deep learning market has been projected to reach $190 billion by 2025 [2]

- Layers and activation functions are critical for building robust networks

- RNNs keep track of information over time, which suits tasks involving patterns in sequences [1]

- Libraries like JAX can help create complex neural networks for various tasks

- Understanding layers and activations is essential to understanding how neural networks function

- Neural networks can be used for image classification, natural language processing, and more

Understanding the Foundations of Neural Networks

Neural networks are designed to find patterns in data. They consist of an input layer, one or more hidden layers, and an output layer. This layered structure lets them handle large, complex datasets [3].

The idea of artificial neural networks dates back to the 1940s. A network is usually called deep when it has at least two hidden layers [4].

As data passes through the network, each layer transforms it [4]. During training, an optimizer decides how the weights are updated, and the number of training passes over the data (epochs) is a hyperparameter the developer chooses [4].
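The training process described above (data transformed by the network, an optimizer updating weights, a developer-chosen number of training rounds) can be sketched in JAX. This is a minimal illustration under stated assumptions, not a production recipe: the toy data come from y = 2x + 1, the "network" is a single linear layer, the optimizer is plain gradient descent with a hand-picked learning rate, and `predict`, `loss_fn`, and `sgd_step` are hypothetical helper names.

```python
import jax
import jax.numpy as jnp

# Toy data (assumption): 20 evenly spaced points on the line y = 2x + 1.
xs = jnp.linspace(-1.0, 1.0, 20)
ys = 2.0 * xs + 1.0

def predict(params, x):
    # A single linear layer: the simplest possible "network".
    w, b = params
    return w * x + b

def loss_fn(params, x, y):
    # Mean squared error between predictions and targets.
    return jnp.mean((predict(params, x) - y) ** 2)

@jax.jit
def sgd_step(params, x, y, lr):
    # The optimizer here is plain gradient descent: each weight moves
    # a small step against its gradient.
    grads = jax.grad(loss_fn)(params, x, y)
    return tuple(p - lr * g for p, g in zip(params, grads))

params = (jnp.array(0.0), jnp.array(0.0))
num_epochs = 200        # training rounds: a developer-chosen hyperparameter
learning_rate = 0.1     # assumption: picked by hand for this toy problem

initial_loss = loss_fn(params, xs, ys)
for epoch in range(num_epochs):
    params = sgd_step(params, xs, ys, learning_rate)
final_loss = loss_fn(params, xs, ys)
print(initial_loss, final_loss, params)
```

After training, `params` should sit close to (2.0, 1.0). Fewer epochs or a smaller learning rate would leave the weights further from the target, which is why both are tuned per problem.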

Neural networks can also forecast future events from historical data, for example predicting hardware failures or health problems [5].

Key parts of artificial neural networks are:

- Input layer: gets the data

- Hidden layers: transform the data to extract useful features

- Output layer: makes the prediction
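A forward pass through the input, hidden, and output layers listed above can be sketched in JAX. The layer sizes (4 input features, 8 hidden units, 3 output classes), the ReLU hidden activation, the softmax output, the random initialization, and the helper names `init_layer` and `forward` are all illustrative assumptions.

```python
import jax
import jax.numpy as jnp

def init_layer(key, n_in, n_out):
    # Small random weights scaled by 1/sqrt(n_in); biases start at zero.
    w = jax.random.normal(key, (n_in, n_out)) / jnp.sqrt(n_in)
    return w, jnp.zeros(n_out)

k1, k2 = jax.random.split(jax.random.PRNGKey(0))
n_features, n_hidden, n_classes = 4, 8, 3   # illustrative sizes (assumption)
hidden = init_layer(k1, n_features, n_hidden)
output = init_layer(k2, n_hidden, n_classes)

def forward(x):
    # Input layer: x is passed in unchanged, one value per feature.
    w1, b1 = hidden
    h = jax.nn.relu(x @ w1 + b1)         # hidden layer: weighted sum + non-linearity
    w2, b2 = output
    logits = h @ w2 + b2                  # output layer: one score per class
    return jax.nn.softmax(logits, axis=-1)  # scores -> probabilities

batch = jnp.ones((5, n_features))   # 5 example inputs
probs = forward(batch)
print(probs.shape)
```

Each row of `probs` is one example's prediction: three non-negative values that sum to 1.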

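Training alternates two passes. The forward pass computes the network's predictions and the resulting loss; the backward pass (backpropagation) computes the gradient of the loss with respect to every weight, and the optimizer uses those gradients to adjust the weights [3]. Deep learning models typically need much more data to work well than many other machine learning models [3].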
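To make the backward pass concrete, the sketch below applies the chain rule by hand for a single sigmoid neuron with squared-error loss, checks the result against `jax.grad`, and then takes one gradient-descent step. For a = σ(wx + b) and L = ½(a − y)², the chain rule gives dL/dw = (a − y) · a(1 − a) · x and dL/db = (a − y) · a(1 − a). The neuron, the loss, the input values, the learning rate, and the helper name `manual_grads` are assumptions chosen for illustration.

```python
import jax
import jax.numpy as jnp

def loss(params, x, y):
    # Forward pass: one sigmoid neuron followed by squared error.
    w, b = params
    a = jax.nn.sigmoid(w * x + b)
    return 0.5 * (a - y) ** 2

def manual_grads(params, x, y):
    # Backward pass by hand, applying the chain rule step by step.
    w, b = params
    a = jax.nn.sigmoid(w * x + b)
    dL_da = a - y              # derivative of 0.5 * (a - y)^2
    da_dz = a * (1.0 - a)      # derivative of the sigmoid
    dL_dz = dL_da * da_dz
    return dL_dz * x, dL_dz    # dz/dw = x, dz/db = 1

params = (jnp.array(0.5), jnp.array(-0.2))
x, y = jnp.array(1.5), jnp.array(1.0)

auto = jax.grad(loss)(params, x, y)    # gradients via automatic differentiation
by_hand = manual_grads(params, x, y)   # gradients via the hand-derived chain rule

# One optimizer step (plain gradient descent, assumed learning rate).
lr = 0.5
updated = tuple(p - lr * g for p, g in zip(params, auto))
print(auto, by_hand, loss(params, x, y), loss(updated, x, y))
```

The two gradient tuples should agree, and the loss after the update should be lower than before it.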


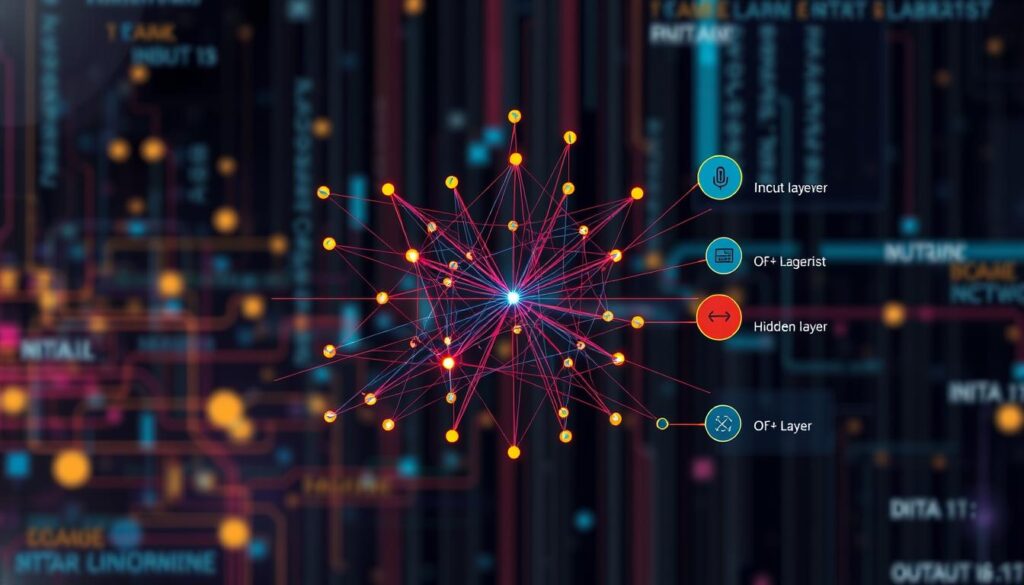

The Architecture of Neural Network Layers

Layers are the building blocks of deep learning models, and their design is vital to a model's success [6]. The input, hidden, and output layers work together to let the network learn and make decisions [6].

The input layer passes data into the network without processing it. The hidden layers extract features from the data [6]. The output layer produces predictions from the hidden layers' outputs [7].

The way layers are arranged determines how information flows through the network, and adding deeper layers can boost performance [8]. Rather than following hand-written rules, neural networks learn from data, and their predictions improve with each weight update [8].

Deeper architectures developed in the 2000s made neural networks more effective [8]. The key roles are:

- Input layer: passes data on without processing it [6]

- Hidden layers: extract features from the data [6]

- Output layer: produces predictions, often expressed as probabilities [7]

- Additional (deeper) layers: can improve network performance [8]
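One way to see how depth changes a model is to build networks from a list of layer sizes. In this sketch, `init_mlp`, `mlp`, and `count_params` are hypothetical helpers, and the ReLU hidden layers and weight scaling are assumptions; the same input and output sizes are used once with one hidden layer and once with three.

```python
import jax
import jax.numpy as jnp

def init_mlp(key, layer_sizes):
    # layer_sizes = [n_inputs, hidden_1, ..., hidden_k, n_outputs]
    keys = jax.random.split(key, len(layer_sizes) - 1)
    params = []
    for k, n_in, n_out in zip(keys, layer_sizes[:-1], layer_sizes[1:]):
        w = jax.random.normal(k, (n_in, n_out)) * jnp.sqrt(2.0 / n_in)
        params.append((w, jnp.zeros(n_out)))
    return params

def mlp(params, x):
    # Hidden layers: affine transform followed by ReLU.
    for w, b in params[:-1]:
        x = jax.nn.relu(x @ w + b)
    # Output layer: affine transform only (raw scores).
    w, b = params[-1]
    return x @ w + b

def count_params(params):
    # Total number of weights and biases across all layers.
    return sum(w.size + b.size for w, b in params)

key = jax.random.PRNGKey(42)
shallow = init_mlp(key, [10, 32, 2])        # 1 hidden layer
deep = init_mlp(key, [10, 32, 32, 32, 2])   # 3 hidden layers
print(count_params(shallow), count_params(deep))
```

With these sizes the one-hidden-layer network has 418 parameters and the three-hidden-layer network has 2,530: extra depth adds capacity at the cost of more weights to train.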

Knowing how layers work is key to building effective deep learning models [6]. By designing layers so that information flows well, we can build models that learn and make decisions effectively [7].

Activation Functions: The Neural Network’s Power Source

Neural networks rely on neuron activations to learn complex patterns, so choosing the right activation function matters: it shapes the output of each layer and affects the network's performance. Without non-linear activation functions, a network cannot learn the complex relationships that most real tasks involve.

Non-linear functions such as Sigmoid, Tanh, and ReLU are vital because they let networks learn complex mappings, which tasks like image classification and natural language processing require.

Linear vs Non-Linear Activation Functions

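A linear activation is simple, but it adds no non-linearity: composing linear (affine) layers yields another affine function, so a stack of such layers is equivalent to a single layer, however deep it is. Non-linear functions are more complex, but they let networks learn complex relationships. The right choice depends on the task and the complexity required.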
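Why linear activations are limiting can be checked numerically. The sketch below uses arbitrary random weights and shapes (assumptions for illustration) to show that two linear layers equal a single linear layer with W = W1·W2 and b = b1·W2 + b2, and that inserting a ReLU between them breaks this equivalence.

```python
import jax
import jax.numpy as jnp

k1, k2, k3, k4, kx = jax.random.split(jax.random.PRNGKey(0), 5)
W1, b1 = jax.random.normal(k1, (3, 4)), jax.random.normal(k2, (4,))
W2, b2 = jax.random.normal(k3, (4, 2)), jax.random.normal(k4, (2,))
x = jax.random.normal(kx, (6, 3))   # 6 example inputs with 3 features each

# Two stacked layers with a linear (identity) activation...
two_layers = (x @ W1 + b1) @ W2 + b2

# ...equal one layer with W = W1 @ W2 and b = b1 @ W2 + b2.
W, b = W1 @ W2, b1 @ W2 + b2
one_layer = x @ W + b

# With a ReLU between the layers, the output no longer matches the collapsed layer.
with_relu = jax.nn.relu(x @ W1 + b1) @ W2 + b2
print(jnp.max(jnp.abs(two_layers - one_layer)), jnp.max(jnp.abs(with_relu - one_layer)))
```

The first printed difference is only floating-point rounding; the second is not, because ReLU zeroes some intermediate values.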

Common Activation Functions Explained

Here are some common activation functions:

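- Sigmoid: σ(x) = 1 / (1 + e^(−x)), which maps inputs from (−∞, ∞) to (0, 1) [9]

- Tanh: maps inputs to (−1, 1); its output is zero-centered and covers a wider range than sigmoid's [9]

- ReLU (Rectified Linear Unit): outputs max(0, x); it is non-linear yet cheap to compute, and because its gradient is 1 for every positive input, it works well with backpropagation [10]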
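The three functions above are one-liners in JAX. This sketch defines them directly from their formulas (the function names are our own; `jax.nn` also provides `sigmoid` and `relu`) and compares their gradients at a single point.

```python
import jax
import jax.numpy as jnp

def sigmoid(x):
    # Squashes any real number into (0, 1).
    return 1.0 / (1.0 + jnp.exp(-x))

def tanh(x):
    # Squashes into (-1, 1); the output is zero-centered.
    return jnp.tanh(x)

def relu(x):
    # max(0, x): passes positive inputs through, zeroes out negative ones.
    return jnp.maximum(0.0, x)

xs = jnp.array([-3.0, -1.0, 0.0, 1.0, 3.0])
for name, f in [("sigmoid", sigmoid), ("tanh", tanh), ("relu", relu)]:
    print(name, f(xs))

# Gradients at x = 2.0: sigmoid's is well below its maximum of 0.25,
# while ReLU's is exactly 1 for any positive input.
print(jax.grad(sigmoid)(2.0), jax.grad(relu)(2.0))
```

The small sigmoid gradient away from zero is one reason ReLU is a common default for hidden layers in deep networks.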

Deep Dive into Neural Network Layer Types

Neural networks are built from several kinds of layers: input, hidden, and output. Together, these layers let deep learning models make sense of complex data. The structure is loosely inspired by the human brain, with each layer doing a specific job. The number of neurons in the input layer matches the dimensionality of the input data [11].

The hidden layers do most of the network's work, and most of its complexity lives in them; a network can have one hidden layer or many [12]. Each neuron has its own weights, so neurons in the same layer can respond to different patterns [12]. Activation functions such as ReLU, Sigmoid, and Tanh control each neuron's output, and each suits different kinds of data [12].

Some key traits of neural network layers include:

- Input layers: one neuron per input feature [11]

- Hidden layers: do most of the work, with each neuron applying its own weights [12]

- Output layers: turn the processed information into predictions or results [11]
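The neuron-level description above and the matrix form that libraries use are the same computation. In this sketch (the sizes, random weights, and ReLU activation are illustrative assumptions; `neuron` is a hypothetical helper), a hidden layer of four neurons is computed one neuron at a time and then as a single matrix product.

```python
import jax
import jax.numpy as jnp

n_features, n_neurons = 3, 4
kw, kb = jax.random.split(jax.random.PRNGKey(1))
W = jax.random.normal(kw, (n_features, n_neurons))  # column j = weights of neuron j
b = jax.random.normal(kb, (n_neurons,))              # one bias per neuron
x = jnp.array([0.2, -1.0, 0.5])   # one example: one input value per feature

def neuron(x, w_j, b_j):
    # One neuron: weighted sum of its inputs plus its own bias, then ReLU.
    return jax.nn.relu(jnp.dot(x, w_j) + b_j)

# Hidden layer computed neuron by neuron...
per_neuron = jnp.array([neuron(x, W[:, j], b[j]) for j in range(n_neurons)])

# ...and as a single matrix product, the form libraries use in practice.
as_layer = jax.nn.relu(x @ W + b)
print(per_neuron, as_layer)
```

Because the input size fixes the number of rows in `W`, the input layer's width must match the number of features in the data.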

Knowing the different layer types and their roles is key to designing a solid network structure. By understanding each layer's strengths and weaknesses, developers can build networks that perform better and are more accurate [11][12].

Intro to Neural Networks: Layers and Activations in Practice

Building a neural network requires understanding the available layer types and knowing how to troubleshoot problems. The architecture largely determines the network's performance. Feedforward networks trained with backpropagation are widely used for image classification [13].

Activation functions are essential because they introduce non-linearity into neuron outputs. The Sigmoid function outputs values between 0 and 1, while ReLU passes positive inputs through unchanged and outputs zero for negative inputs. Understanding these functions is critical for building a good neural network [14].

The right architecture depends on the type of problem: convolutional neural networks (CNNs) are well suited to image classification, while recurrent neural networks (RNNs) work well with sequences of inputs [15]. Choosing the right architecture and activation functions is vital for building efficient networks.

| Neural Network Type | Suitable for |
|---|---|
| CNNs (convolutional neural networks) | Image classification tasks |
| RNNs (recurrent neural networks) | Tasks involving sequences of inputs |
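How an RNN carries information across time steps can be sketched with `jax.lax.scan`, which threads a state through a loop. This is a minimal vanilla RNN cell with assumed sizes and random, untrained weights; `rnn_step` is a hypothetical name, and the tanh activation is an assumption (a common choice for this cell).

```python
import jax
import jax.numpy as jnp

n_in, n_hidden = 2, 5
k1, k2, k3 = jax.random.split(jax.random.PRNGKey(0), 3)
W_x = jax.random.normal(k1, (n_in, n_hidden)) * 0.5      # input -> hidden
W_h = jax.random.normal(k2, (n_hidden, n_hidden)) * 0.5  # hidden -> hidden
b = jnp.zeros(n_hidden)

def rnn_step(h, x_t):
    # The new hidden state mixes the current input with the previous state,
    # which is how the network "remembers" earlier steps in the sequence.
    h_new = jnp.tanh(x_t @ W_x + h @ W_h + b)
    return h_new, h_new     # (state carried to next step, output for this step)

sequence = jax.random.normal(k3, (7, n_in))   # 7 time steps, 2 features each
h0 = jnp.zeros(n_hidden)
final_h, all_h = jax.lax.scan(rnn_step, h0, sequence)
print(all_h.shape, final_h)
```

Each row of `all_h` is the hidden state after one time step, and `final_h` summarizes the whole sequence.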

By following these guidelines, developers can build effective neural networks that make good use of their architecture and layers [14].

Conclusion: Mastering Neural Network Fundamentals

Learning the basics of neural networks is key to making them work well. Network architecture and activation functions are central: getting them right is what makes a network efficient and effective.

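Libraries like JAX make it easier to work with neural networks, letting us focus on the parts that matter. Core JAX, however, is a powerful but low-level library [16]: it provides NumPy-style array operations and composable transformations such as automatic differentiation, leaving higher-level building blocks to libraries built on top of it. That is why a solid grasp of the fundamentals still matters.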
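As an illustration of how core JAX's building blocks compose, the sketch below writes a prediction function for a single example, batches it with `jax.vmap`, differentiates the loss with `jax.grad`, and compiles the result with `jax.jit`. The tiny tanh model, the data, and the names `predict_one`, `predict_batch`, and `mse` are assumptions for illustration.

```python
import jax
import jax.numpy as jnp

def predict_one(params, x):
    # Written for a single example; no batch dimension anywhere.
    w, b = params
    return jnp.tanh(jnp.dot(w, x) + b)

# vmap adds the batch dimension: map over x (axis 0), share params (None).
predict_batch = jax.vmap(predict_one, in_axes=(None, 0))

def mse(params, xs, ys):
    # Mean squared error over the whole batch.
    return jnp.mean((predict_batch(params, xs) - ys) ** 2)

# grad differentiates and jit compiles; the transformations compose.
grad_fn = jax.jit(jax.grad(mse))

params = (jnp.array([0.5, -0.3, 0.2]), jnp.array(0.0))
xs = jnp.ones((8, 3))   # 8 examples with 3 features each
ys = jnp.zeros(8)
grads = grad_fn(params, xs, ys)
print(grads)
```

`grads` has the same structure as `params` (a weight vector and a scalar bias), ready to feed into an update step like the ones sketched earlier.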

Mastering the fundamentals lets us build systems that solve complex problems, and choosing the right architecture and activation functions can greatly improve how well a system performs [17].

Source Links

[1] A Primer on Current & Past Deep Learning Methods for NLP – https://ronak-k-bhatia.medium.com/a-primer-on-current-past-deep-learning-methods-for-nlp-c399fe28291d

[2] learningml (GitHub repository) – https://github.com/Ishannaik/learningml

[3] Exploring Neural Networks: Basics to Advanced Applications (Tredence Insights) – https://www.tredence.com/blog/neural-networks-guide-basic-to-advance

[4] Understanding the basics of Neural Networks (for beginners) – https://medium.com/geekculture/understanding-the-basics-of-neural-networks-for-beginners-9c26630d08

[5] A Beginner’s Guide to Neural Networks and Deep Learning – https://wiki.pathmind.com/neural-network

[6] The Essential Guide to Neural Network Architectures – https://www.v7labs.com/blog/neural-network-architectures-guide

[7] Introduction to Deep Neural Networks with layers Architecture Step by Step with Real Time Use cases – https://medium.com/analytics-vidhya/introduction-to-deep-neural-networks-with-layers-architecture-step-by-step-with-real-time-use-cases-ef544580687b

[8] What is a Neural Network? (GeeksforGeeks) – https://www.geeksforgeeks.org/neural-networks-a-beginners-guide/

[9] Activation Functions Neural Networks: A Quick & Complete Guide – https://www.analyticsvidhya.com/blog/2021/04/activation-functions-and-their-derivatives-a-quick-complete-guide/

[10] Activation Functions in Neural Networks [12 Types & Use Cases] – https://www.v7labs.com/blog/neural-networks-activation-functions

[11] Deep Dive into Neural Networks – https://medium.com/@nerminhafeez2002/deep-dive-into-neural-networks-192f2ddc2d46

[12] Deep Learning 101: Beginners Guide to Neural Network – https://www.analyticsvidhya.com/blog/2021/03/basics-of-neural-network/

[13] Neural Network Basics – http://www.webpages.ttu.edu/dleverin/neural_network/neural_networks.html

[14] A Quick Introduction to Neural Networks – https://ujjwalkarn.me/2016/08/09/quick-intro-neural-networks/

[15] A Gentle Introduction To Neural Networks Series, Part 1 – https://towardsdatascience.com/a-gentle-introduction-to-neural-networks-series-part-1-2b90b87795bc

[16] Introduction to Neural Networks (PyImageSearch) – https://pyimagesearch.com/2021/05/06/introduction-to-neural-networks/

[17] Introduction to Neural Network in Machine Learning – https://www.analyticsvidhya.com/blog/2022/01/introduction-to-neural-networks/